A super common first usecase we see at Credal is a very simple application of Retrieval Augmented Generation called something like the “Benefits Buddy” - an AI tool for HR teams that helps answer employee questions about the company’s HR policies. Specialized HR software can often be really hard to get through procurement: although HR is a vital function that supports the entire business, it can be hard to quantify ROI for finance teams or to justify complex reviews of external vendors handling sensitive HR data. Meanwhile, free AI tools often lack the data protection, security or governance functionality that HR teams typically need given the sensitivity of the data they handle, especially once you consider the general concerns about sensitive data being used to train AI models.

But like any highly operational team, HR needs to adopt AI. HR professionals are therefore learning how to configure their own tooling on top of existing horizontal platforms that let them accelerate their work through an AI assistant without having to onboard HR-specific software.

Among the many tasks that occupy an HR department's time is answering employee questions about benefits policies. This is something Generative AI can do really well, provided it actually knows about the company's policies, and so HR leaders are looking for ways to accelerate their teams using Gen AI. Implementing such tools can not only speed up employee onboarding but also dramatically improve the perception of HR service delivery at an organization.

The first thing you’re going to need to answer your users’ questions about your HR knowledge base is an HR knowledge base! General purpose AI models like GPT-4 don’t know anything about your HR policies, PTO, parental leave, etc., so it is critical to give them some source material or documentation to refer to so they can get the exact right answer to each question.

Most companies we speak to are using either Confluence, SharePoint Sites or Notion to manage this. In fact, we see a very stark dividing line: below a certain size, a company is almost certain to be using Notion, and above it, almost certain to be using Confluence.

So the first order of business will be to get your data into one of these. Although popular with startups for its carefully crafted UI, Notion still has a lot of rough edges for its Enterprise customers, especially around the way permissions are (not) exposed in the API. Confluence and SharePoint Sites have slightly less friendly UIs, but Atlassian and Microsoft are obviously both extremely popular software providers to Enterprises. At Credal, we’re a pretty happy customer of Atlassian for Jira and Confluence, but I still have pretty mixed feelings about Azure and Office.

Now you’ll need a way for the AI to refer to your knowledge base when answering user questions. We discussed this a little in the Security Chatbot section, but most companies choose to load their data into a VectorDB so they can run semantic search on it. That’s not the only possible way though, so if you’re interested in learning about whether and why to take this approach, have a read through our previous entry.

As usual, you’ll want to pick one of the many VectorDBs out there on the market today: MongoDB and Pinecone are probably the two most “Enterprise Ready” offerings today, but great options also exist in Weaviate, Qdrant, pgvector and more. Stay tuned for a specialized “VectorDB” guide.
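To make the semantic search step more concrete, here is a minimal sketch of what "load your chunks into an index and search it" looks like. It is deliberately a toy: the `embed` function is a bag-of-words stand-in for a real embedding model, `ToyVectorIndex` is a hypothetical in-memory class rather than any real VectorDB client, and the policy text is made up for illustration.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy 'embedding': word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class ToyVectorIndex:
    """Hypothetical in-memory stand-in for a VectorDB."""

    def __init__(self) -> None:
        self._rows: list[tuple[str, Counter]] = []

    def add(self, chunk: str) -> None:
        self._rows.append((chunk, embed(chunk)))

    def search(self, query: str, k: int = 3) -> list[tuple[float, str]]:
        q = embed(query)
        scored = [(cosine(q, vec), chunk) for chunk, vec in self._rows]
        return sorted(scored, key=lambda s: s[0], reverse=True)[:k]

# Made-up policy snippets, standing in for chunks of your HR knowledge base.
index = ToyVectorIndex()
index.add("Employees accrue 20 days of paid time off (PTO) per year.")
index.add("Parental leave is 16 weeks at full pay for all new parents.")
index.add("The dental plan covers two cleanings per year.")

top = index.search("How much parental leave do new parents get?", k=1)
print(top[0][1])
```

Swapping the toy pieces for a real embedding model and a hosted VectorDB changes the plumbing, but the shape of the flow (embed chunks, embed the question, return the nearest chunks) stays the same.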

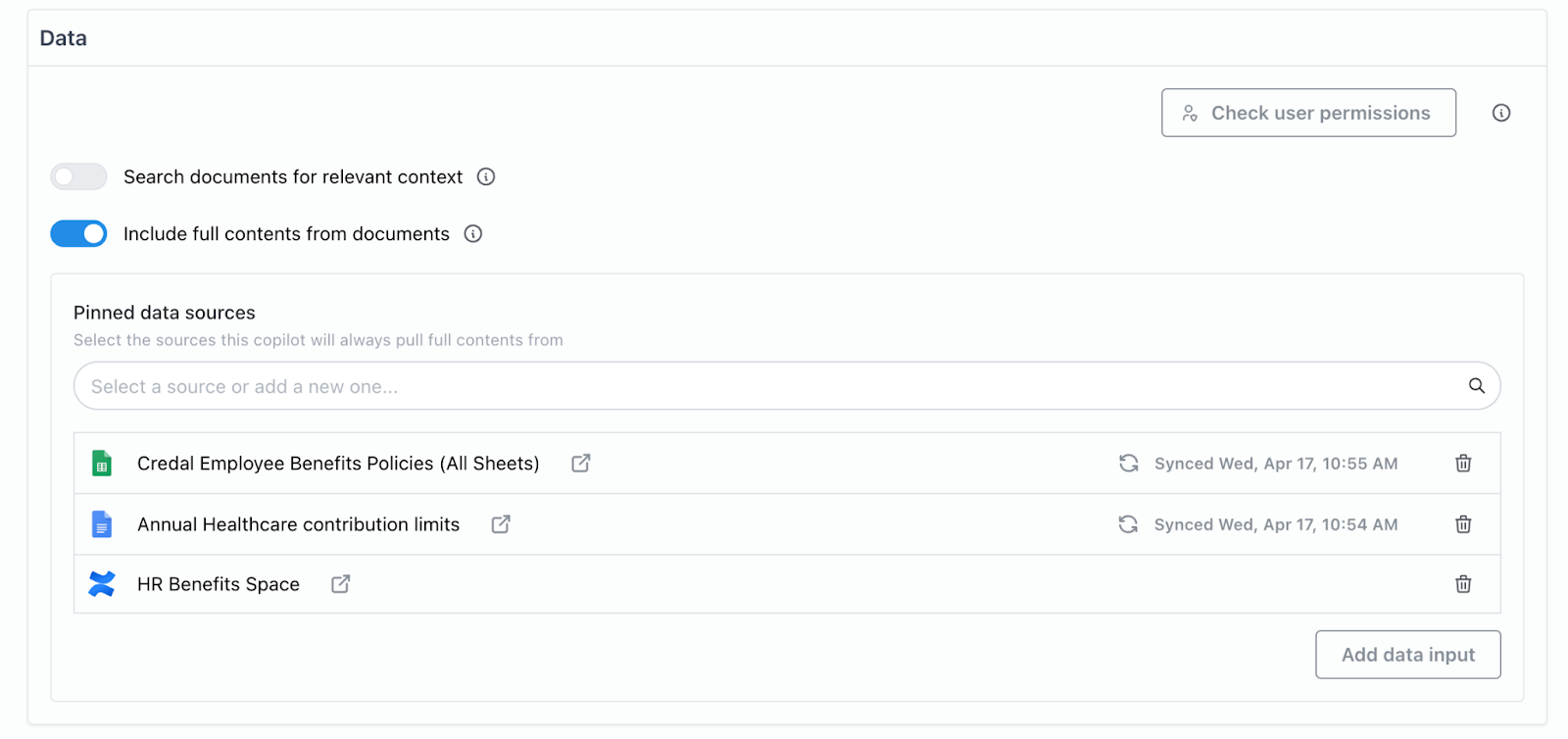

In Credal, this process is as simple as filling out 3 fields in a form:

Now most companies will of course have a lot more than just their HR Space in their Confluence account. So when you’re configuring what data to connect to your copilot in Credal, you’d just choose the specific Confluence spaces you care about. Sometimes there may be a couple of spreadsheets, documents or other resources that you want to link from other sources, like Google Drive, Box etc., and Credal makes it really easy to hook those up too.

Of course, if you’re connecting your entire Google Drive or Confluence Space to an LLM chatbot, you’ll need to make sure that any permissions you have set up in those places are automatically respected by the chatbot (more on this later).

At this point, most enterprises have either:

Now that you’ve got your VectorDB in place, you can hook it up to the chat interface wrapper. Credal supports both “Engineered” AI Applications and “Point & Click” (no-code) AI Applications.

Since you probably can’t use a Slack channel as the UI for an HR bot answering sensitive questions (many questions will need to be asked privately), your demo will likely need some kind of UI. Luckily, there are many easy AI chat interfaces you can either procure or just get from the open source community. Credal comes with a built-in chat interface as well, which accounts for about 20% of our users’ access pattern:

To get an MVP out there, and start to get some usage from real users, we probably just need to add in a little bit of control over how the AI operates and responds. We'll want citations, the ability to steer the AI with reference FAQ examples, and ironclad guarantees that sensitive data are not making it into AI training models.

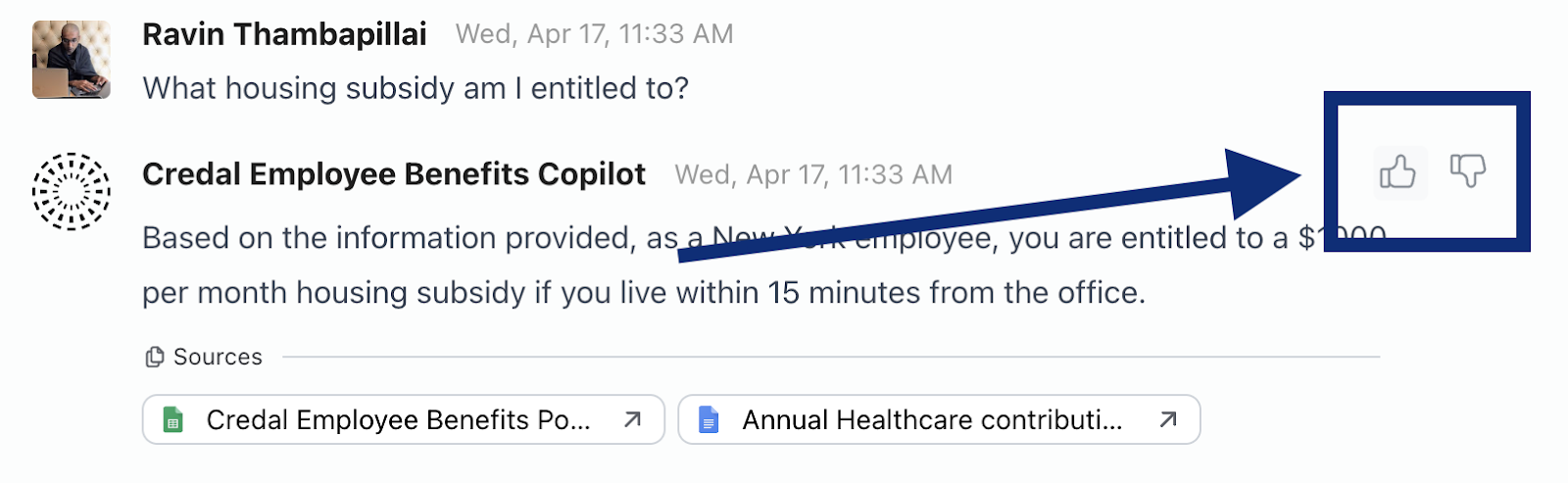

We obviously don’t want our HR AI giving blatantly incorrect information, but more subtly, we probably want our users to be able to refer back to the specific source documentation from which an answer was drawn. So the next step is to make sure that when we ingest the content from Confluence, we also record where each piece of content came from. That gives us a way to point the user to the underlying source of information so they can trust the response.

The example above shows how we think about this at Credal, linking out to the specific underlying source of information so that the end user can review and evaluate for themselves.
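One way to wire this up, sketched below under some assumptions: each chunk stored at ingestion time carries its page title and URL, the prompt numbers the sources, and the UI renders the same numbering as links. The `SourcedChunk` class, both function names, and the example URL are hypothetical, and this is not a description of Credal's internals.

```python
from dataclasses import dataclass

@dataclass
class SourcedChunk:
    text: str
    title: str  # page title captured at ingestion time
    url: str    # link back to the source page (hypothetical URL below)

def build_cited_prompt(question: str, chunks: list[SourcedChunk]) -> str:
    """Number each source so the model can cite it as [1], [2], ..."""
    sources = "\n".join(
        f"[{i}] {c.title}: {c.text}" for i, c in enumerate(chunks, start=1)
    )
    return (
        "Answer using only the numbered sources below and cite them like [1].\n\n"
        f"{sources}\n\nQuestion: {question}"
    )

def format_citations(chunks: list[SourcedChunk]) -> str:
    """Render the same numbering as links the end user can follow."""
    return "\n".join(
        f"[{i}] {c.title} ({c.url})" for i, c in enumerate(chunks, start=1)
    )

retrieved = [
    SourcedChunk(
        text="Employees accrue PTO monthly.",
        title="PTO Policy",
        url="https://example.atlassian.net/wiki/spaces/HR/pages/1",
    ),
]
prompt = build_cited_prompt("How does PTO accrue?", retrieved)
footer = format_citations(retrieved)
print(prompt)
print(footer)
```

Because the numbering in the prompt and in the footer comes from the same list, a `[1]` in the model's answer always maps to a link the user can open and check.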

Secondly, the HR managers or subject matter experts (in this case likely the HR person responsible for the benefits program) need a way to steer and control the responses. Ideally, they should be able to review the outputs and determine which results were good (or bad), so that the bad ones are corrected for future questions and the good ones are used as reference examples when similar questions come up. In the long run, we’ll need to ensure that we can expire, delete or edit these reference answers, but for the sake of shipping an MVP quickly, you might want to skip some of that and solve those problems once the application has some traction at your organization.

At Credal, we provide both APIs and UI elements (for those customers who are using Credal’s UI to serve their copilots) to submit feedback on any given response. The Subject Matter Expert can review the feedback, and mark certain responses as “good” or “bad”, which we can then feed back into the prompt so that the next end user can get a better response next time. You might even want to seed some example questions if you know what the most commonly asked questions are!

The HR team or Benefits expert can steer the LLM by providing this feedback, ensuring that good, relevant previous responses are used to guide the AI, and that bad previous responses are not, without feeding any sensitive data into the AI learning algorithms.
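As a rough illustration of steering through the prompt rather than through model training, the sketch below keeps only expert-approved answers and prepends them as reference examples. The `Feedback` class and function names are hypothetical, and a fuller version would pick the approved examples most similar to the incoming question instead of simply taking the first few.

```python
from dataclasses import dataclass

@dataclass
class Feedback:
    question: str
    answer: str
    rating: str  # "good" or "bad", as marked by the subject matter expert

def good_examples(feedback: list[Feedback], limit: int = 3) -> list[Feedback]:
    """Keep only approved answers; 'bad' ones never reach the prompt."""
    return [f for f in feedback if f.rating == "good"][:limit]

def build_prompt_with_examples(question: str, feedback: list[Feedback]) -> str:
    examples = "\n\n".join(
        f"Q: {f.question}\nA: {f.answer}" for f in good_examples(feedback)
    )
    return (
        "You are an HR benefits assistant. Use these approved answers as a guide.\n\n"
        f"{examples}\n\nQ: {question}\nA:"
    )

# Made-up reviewed feedback for illustration.
reviewed = [
    Feedback("How do I request PTO?", "Submit a request in the HR portal.", "good"),
    Feedback("Is dental covered?", "No.", "bad"),
]
prompt = build_prompt_with_examples("How do I book time off?", reviewed)
print(prompt)
```

Nothing here touches model weights: the approved answers only travel in the prompt at request time, which is what keeps the feedback loop separate from any training process.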

Of course, over time, the ‘right’ answers will change, and so you want to be able to edit, curate, and improve those answers as time goes by.

Credal guarantees sensitive company data is protected in a variety of ways: we automatically redact PII before it leaves the organization's boundaries, we ensure Zero Day Retention policies are in place with third party models, and we provide API-exportable Audit Logs that help an organization monitor its usage of AI in great detail. HR's needs around sensitive data can be especially acute, because HR handles a lot of PII, and often even PHI, which should be carefully monitored or controlled before being sent to a third party.
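To give a feel for what redaction before an outbound model call involves, here is a deliberately simple regex-based sketch. It is not how Credal implements redaction: the patterns only cover emails, US-style SSNs and US-style phone numbers, the `PII_PATTERNS` table and `redact` function are hypothetical, and production systems generally need far more robust detection (names, addresses, PHI and so on).

```python
import re

# Hypothetical, intentionally narrow patterns for illustration only.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}

def redact(text: str) -> str:
    """Replace each match with a placeholder before the text leaves your boundary."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text

message = "Email jane.doe@example.com or call 555-123-4567 about SSN 123-45-6789."
print(redact(message))
```

The important design point is where this runs: on your side of the boundary, before the prompt is sent to any third party model.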

Great, at this point, it might make sense to start rolling out to a few pilot users, get initial feedback, see what’s working and not, and start the work of getting to great.

Here’s where it starts to get much more complicated. Here are a few characteristics of the best and/or most sophisticated versions of these HR bots we’ve seen:

The way we think about this at Credal assumes that the majority of the time, you’re going to want to inherit the permissions of the underlying source system. If you have to build that level of permissions integration in house, it can get really complicated, and so Credal makes it extremely easy to inherit those permissions and use them in any application you choose to build, for the specific sources you want to connect.
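If you do build this in house, the core idea can be sketched as a filter applied to retrieved chunks before they reach the prompt. This assumes you have already copied each document's access groups from the source system at ingestion time, which is the genuinely hard part; `SecuredChunk`, `visible_to` and the group names below are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class SecuredChunk:
    text: str
    allowed_groups: frozenset[str]  # groups copied from the source system at ingestion

def visible_to(user_groups: set[str], chunks: list[SecuredChunk]) -> list[SecuredChunk]:
    """Drop any chunk the user could not open in the source system."""
    return [c for c in chunks if c.allowed_groups & user_groups]

chunks = [
    SecuredChunk("PTO policy summary", frozenset({"all-employees"})),
    SecuredChunk("Executive compensation bands", frozenset({"hr-admins"})),
]

employee_view = visible_to({"all-employees"}, chunks)
admin_view = visible_to({"all-employees", "hr-admins"}, chunks)
print([c.text for c in employee_view])
print([c.text for c in admin_view])
```

Filtering at query time, before the prompt is assembled, means the model never sees content the asking user is not entitled to, so it cannot leak it in an answer.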

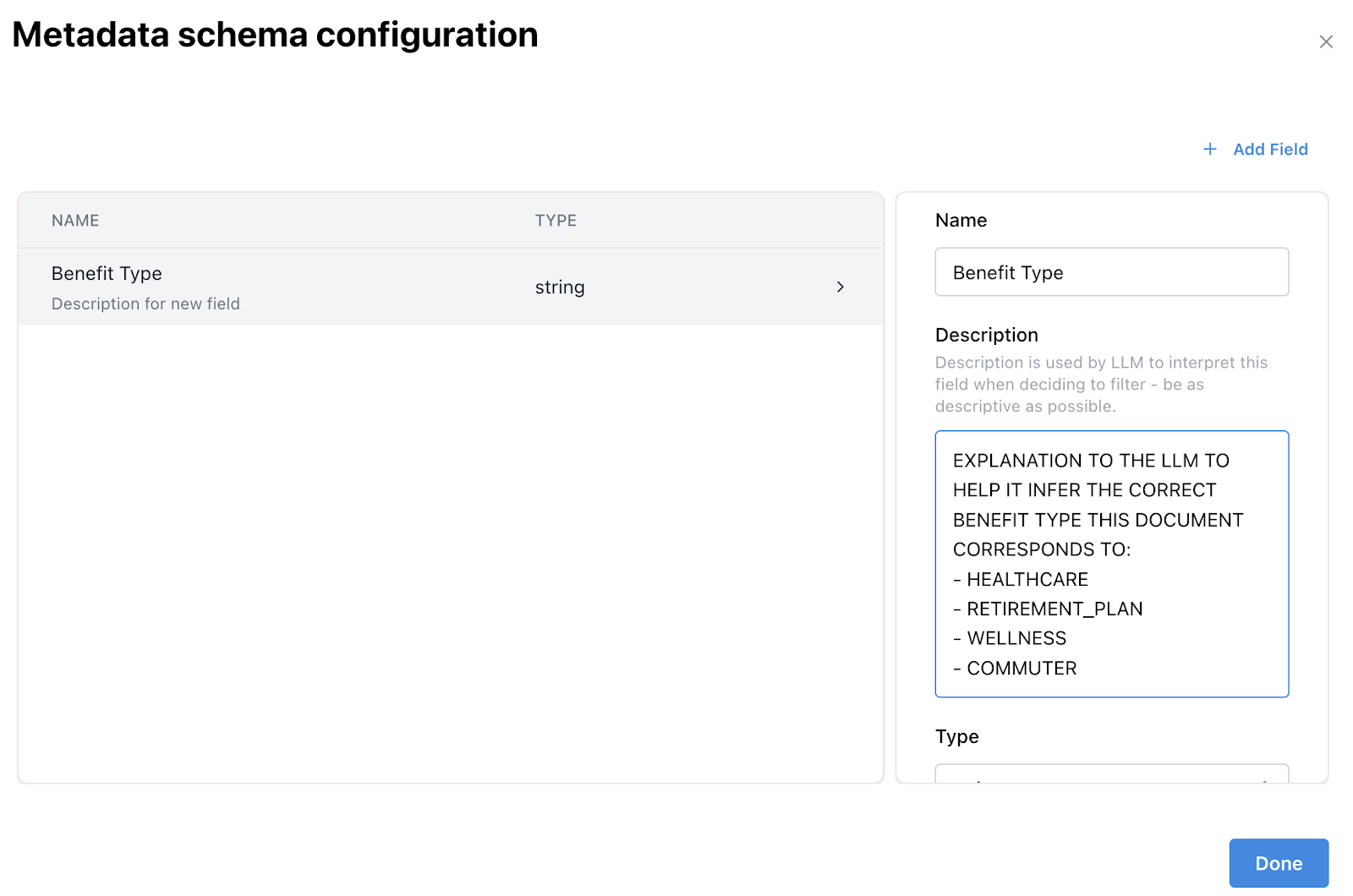

Credal supports all these behaviors out of the box, but it can take time to implement them, so if you’re building these in house, make sure you’re being thoughtful about how big an impact each one is making. For example, we’ve seen re-ranking improve search relevancy by about 10% (measuring the frequency at which a highly relevant chunk of content is included in the top 3 results), with slightly smaller improvements from hybrid search. Metadata filtering can be tremendously powerful for certain types of usecases, but requires decisions about whether the corresponding metadata should be inferred or simply provided at run time, and if inferred, there are questions about when to recalculate the metadata (every time the data is altered? Only when it is dramatically altered?). These decisions are impacted by the volume of data you would be tagging, since that determines the cost of inferring the metadata, and cost can become prohibitive for certain usecases with hundreds of thousands of documents changing every day or week.
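The relevancy measure described above (how often a highly relevant chunk lands in the top 3 results) is easy to compute yourself when deciding whether a technique like re-ranking is worth its cost. A minimal sketch, with a hypothetical `hit_rate_at_k` function and made-up chunk IDs and labels:

```python
def hit_rate_at_k(
    results: dict[str, list[str]],
    relevant: dict[str, set[str]],
    k: int = 3,
) -> float:
    """Fraction of queries with at least one relevant chunk in the top k results."""
    if not results:
        return 0.0
    hits = sum(1 for query, ranked in results.items() if relevant[query] & set(ranked[:k]))
    return hits / len(results)

# Made-up evaluation set: for each query, the chunk IDs a reviewer marked as relevant.
relevant = {"q1": {"d"}, "q2": {"x"}}

# Ranked chunk IDs per query, before and after a (hypothetical) re-ranking step.
baseline = {"q1": ["a", "b", "c", "d"], "q2": ["x", "y", "z", "w"]}
reranked = {"q1": ["d", "a", "b", "c"], "q2": ["x", "y", "z", "w"]}

print(hit_rate_at_k(baseline, relevant))
print(hit_rate_at_k(reranked, relevant))
```

Running the same labeled query set through each retrieval variant gives you a like-for-like number to weigh against the engineering time each improvement takes.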

The state of the art here is definitely to build up an array of different signals for each individual chunk of a document: metadata you extract from the source system, full-text-search similarity, vector similarity and inferred metadata. You can then combine those signals in a scoring system, tuned on what was useful in historical queries, to better evaluate the relevance of each document. You might want to tune the scoring system to weight more recently updated articles more heavily (especially for something like a healthcare plans question, where documentation typically needs annual refreshing).
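A scoring system like this can start out as a simple weighted sum. The sketch below is one possible shape, not a recommended configuration: the `Signals` fields, the weights, and the one-year half-life for recency are all hypothetical placeholders that you would tune against your own historical queries.

```python
from dataclasses import dataclass
from datetime import date

@dataclass
class Signals:
    vector_similarity: float  # 0..1, from the vector search
    keyword_score: float      # 0..1, normalized full-text search score
    metadata_match: float     # 1.0 if the chunk matches metadata filters, else 0.0
    last_updated: date        # from the source system

# Hypothetical weights; in practice, tune these on historical queries.
WEIGHTS = {"vector": 0.5, "keyword": 0.2, "metadata": 0.1, "recency": 0.2}
HALF_LIFE_DAYS = 365  # a chunk's recency score halves every year

def recency(last_updated: date, today: date) -> float:
    """Exponential decay: 1.0 for content updated today, 0.5 after one half-life."""
    age_days = max((today - last_updated).days, 0)
    return 0.5 ** (age_days / HALF_LIFE_DAYS)

def score(s: Signals, today: date) -> float:
    return (
        WEIGHTS["vector"] * s.vector_similarity
        + WEIGHTS["keyword"] * s.keyword_score
        + WEIGHTS["metadata"] * s.metadata_match
        + WEIGHTS["recency"] * recency(s.last_updated, today)
    )

today = date(2024, 1, 1)
this_years_plan = Signals(0.8, 0.6, 1.0, date(2023, 12, 1))
old_plan = Signals(0.8, 0.6, 1.0, date(2021, 1, 1))
print(score(this_years_plan, today) > score(old_plan, today))
```

With identical similarity scores, the recently refreshed healthcare plan page outranks the stale one, which is the behavior you want for documentation that is rewritten annually.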

At Credal, we let you either specify metadata at the time of upload, or define metadata that you want the LLM to extract from the data, using a description.

Above, you see you can provide a description of the field type, and an LLM can use that description to infer the best possible value for each given document you upload into this collection.

4. They make it easy to adopt the best AI models as they are released

One important aspect of great versions of these tools is that they are positioned to take advantage of the huge improvements in the industry over time. Since Credal was released, we've seen 17 different State of the Art models released. As these models get better over time, you want to make it really easy to switch the underlying model out to the best value for money model available to you at your Enterprise.

The way we've approached this at Credal is to give copilot creators a little dropdown that contains just enough information for AI-curious employees to configure copilots with the most relevant models for them. As new models come out, if you want to flip to the latest and greatest, you can just leave it to Credal to figure it out.

In general, the best AI tools for each part of the stack are evolving really fast, which is why Credal is built with a highly modular architecture that allows Enterprises to plug and play their favourite Vector stores, Language and Embedding Models, Chunking strategies and more, creating a truly flexible, modular Generative AI platform that works not just for HR operations, but for every operational part of the Enterprise.

Once again, we see a really simple usecase for AI that is straightforward in its initial creation but gradually gets more complex over time. The truly awesome version, where it really feels like the Assistant deeply understands your data and can answer meaningfully, as though it were a member of your HR team, takes much more. The amount of time you want to invest in building one of these tools will likely depend on how valuable the problem is to solve. One of the challenges with these smaller usecases is that even though they save users a lot of time, most of the benefit accrues to the HR team, who are now much more efficient and can get more work done. And since the tool may touch sensitive data, you still need to work through a complex security / onboarding process with IT that can take weeks, so a point solution can be really hard to justify.

Buying a Developer RAG Platform as SaaS that lets you solve a lot of these low hanging fruit usecases quickly, while still being flexible enough to support some of the more sophisticated, complex usecases, can often be the best of both worlds: valuable enough to make it through procurement, easy enough to use to solve a lot of these smaller usecases in a really high quality way.

Credal gives you everything you need to supercharge your business using generative AI, securely.